Public service announcement regarding WebSocket timeouts.

TL;DR: WebSocket connections close after 1 minute of inactivity.

So I have been plagued by a connection issue for the last month and finally decided to track it down.

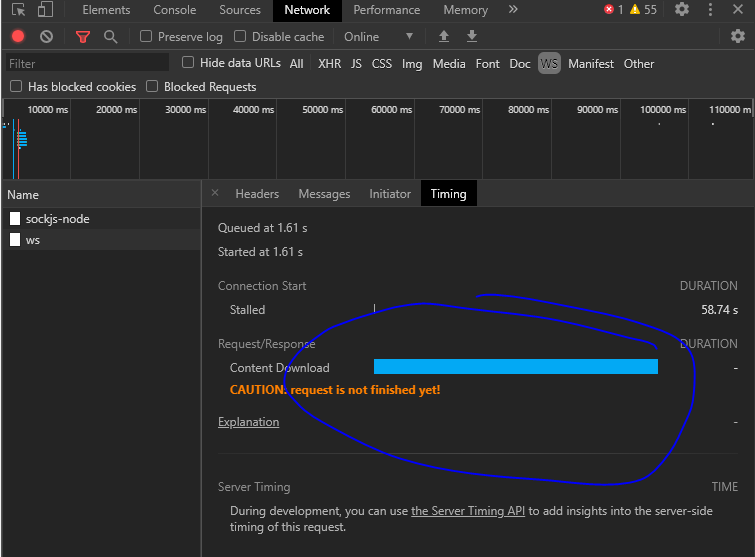

This is what I was seeing in Chrome DevTools.

After 1 minute the blue line goes away and it reports Content Downloaded. It took me a while to figure out that this basically means the WebSocket connection closed.

My session management scheme was to refresh the token every 4 minutes, which also refreshes the WebSocket connection. The problem with this interval is that the connection is closed after 1 minute, well before the 4-minute refresh comes around. I would then get access denied errors, because once the connection closed, resgate dropped the authentication information tied to that connection ID.
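To make the timing concrete, here is a small TypeScript sketch. It is not resgate or ResClient code: `firstIdleClose` and `refreshSchedule` are made-up helpers for illustration. It assumes the roughly 60-second idle timeout seen in DevTools and that any message on the socket resets the idle timer, and it reports when the connection would first be closed for being idle.

```typescript
// Sketch: when would an idle timeout close the socket, given when messages were sent?
// Assumes any message resets the idle timer and the socket opens at t = 0.
function firstIdleClose(
  activityMs: number[], // sorted send times, in ms since the socket opened
  idleTimeoutMs: number,
  untilMs: number, // end of the window being checked
): number | null {
  let last = 0;
  for (const t of [...activityMs, untilMs]) {
    // A silent gap of at least the timeout means the socket was closed before `t`.
    if (t - last >= idleTimeoutMs) return last + idleTimeoutMs;
    last = t;
  }
  return null; // never idle long enough within the window
}

// Send times for a token refresh every `intervalMs`, up to `untilMs`.
function refreshSchedule(intervalMs: number, untilMs: number): number[] {
  const times: number[] = [];
  for (let t = intervalMs; t <= untilMs; t += intervalMs) times.push(t);
  return times;
}

const IDLE_TIMEOUT_MS = 60_000; // the ~1 minute observed in DevTools
const TEN_MINUTES_MS = 600_000;

// 4-minute refresh: prints 60000, closed long before the first refresh.
console.log(firstIdleClose(refreshSchedule(240_000, TEN_MINUTES_MS), IDLE_TIMEOUT_MS, TEN_MINUTES_MS));
// 58-second refresh: prints null, the socket stays open.
console.log(firstIdleClose(refreshSchedule(58_000, TEN_MINUTES_MS), IDLE_TIMEOUT_MS, TEN_MINUTES_MS));
```

With a 4-minute refresh the very first silent minute already closes the connection, while a 58-second refresh never leaves a gap that long.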

There are at least three ways to solve this:

- Implement a ping to keep the connection open. You can do this by periodically calling a ping or heartbeat method on resgate (it might be worth putting this in the protocol).

- In my case I just lowered my token refresh interval from 4 minutes to 58 seconds, which works for now. I might implement a heartbeat as we scale if I start to notice performance issues.

- Use the `client.setOnConnect` method to refresh the connection's authentication information whenever the client connects. This didn't work for me because I set aggressive token timeouts: tokens expire after 5 minutes if they aren't used. So if someone waited 6 minutes before doing anything on the site, they would be logged out on connect. That's why I opted for the interval refresh to keep the token alive, and the token now expires after 1 minute, which is probably better for security anyway.
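The ping option (and the reason the 58-second refresh works) comes down to sending something over the socket before the idle timeout expires. Below is a minimal TypeScript sketch of such a heartbeat. It assumes a client exposing `call(rid, method)` that returns a Promise, which is the shape of ResClient's `call`; the `RpcClient` interface, `keepAliveInterval`, `startKeepAlive`, and the `heartbeat` resource with its `ping` method are all hypothetical, so some service behind resgate would have to handle that call.

```typescript
// Minimal client shape this sketch needs (an assumption modeled on ResClient's `call`).
interface RpcClient {
  call(rid: string, method: string, params?: unknown): Promise<unknown>;
}

// Pick a heartbeat interval safely below the observed idle timeout.
function keepAliveInterval(idleTimeoutMs: number, marginMs = 5_000): number {
  if (idleTimeoutMs <= marginMs) {
    throw new Error("idle timeout must be larger than the safety margin");
  }
  return idleTimeoutMs - marginMs;
}

// Sends a hypothetical `heartbeat.ping` call every `intervalMs`.
// Returns a function that stops the heartbeat (e.g. on logout).
function startKeepAlive(
  client: RpcClient,
  intervalMs: number,
  rid = "heartbeat", // hypothetical resource; a service must answer for it
  method = "ping", // hypothetical method name
): () => void {
  const timer = setInterval(() => {
    client.call(rid, method).catch((err) => {
      // A failed ping usually means the connection is already gone;
      // log it and let the client's own reconnect handling take over.
      console.warn("keepalive ping failed:", err);
    });
  }, intervalMs);
  return () => clearInterval(timer);
}
```

Usage would look something like `const stop = startKeepAlive(client, keepAliveInterval(60_000));`, calling `stop()` when the user logs out. Staying a few seconds under the observed timeout is the same idea as picking 58 seconds for the token refresh.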

Anyway, I thought I would put out a PSA just in case anyone else runs into this and doesn’t want to waste time trying to figure it out.